
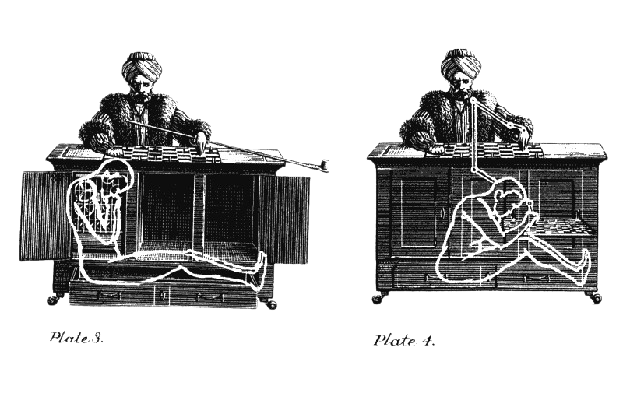

Have you heard the story of the “Mechanical Turk”? No, not Amazon’s crowdsourcing marketplace but its namesake.

In the late 18th century, it was an Automaton Chess Player that defeated all the best chess players and famous people of its day. Everyone was fooled, emperors (Napoleon famously swept the pieces off the table in disgust) and laity alike.

It was a deception machine. There was no real “machine thinking” going on; rather, a person hidden underneath played and worked the magic.

[see my short video story on this topic, also many posts on my personal blog]

It’s an appropriate metaphor for the AI washing that is happening constantly, even as I type. An overpromising of what AI is and a public deception to garner investment and business market capture. Let me explain, as far as education is concerned - my field.

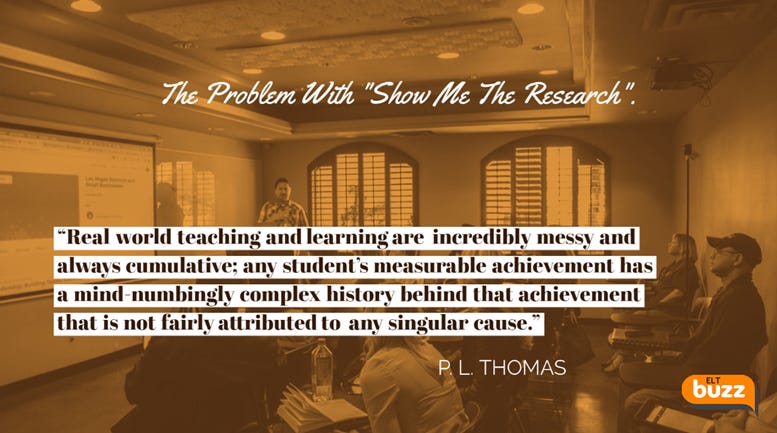

AI-washing is passing off a general-purpose AI tool as one’s own, for the sake of incorporating hype-generating AI lingo into one’s “success” and marketing messaging. I see it daily in my newsfeeds and messaging, ranging from teachers making their “unipurpose” chatbots to ed-companies spouting how they’ve embedded AI into all their learning systems and practices. I’m sure all this spring’s ed conferences are full of this AI bluster. It’s deception, plain and simple. It’s another con like the once tossed-around monikers of mobile learning, MOOCs, spaced-repetition, personalization and so many more that have come before.

I’ve worn many hats and one of them was running a large educational tech company. I’ll be publishing and confessing more about that soon. But trust me, so many with only dreams of dollar signs - want you to drink the AI kool-aid. Don’t. Just say no.

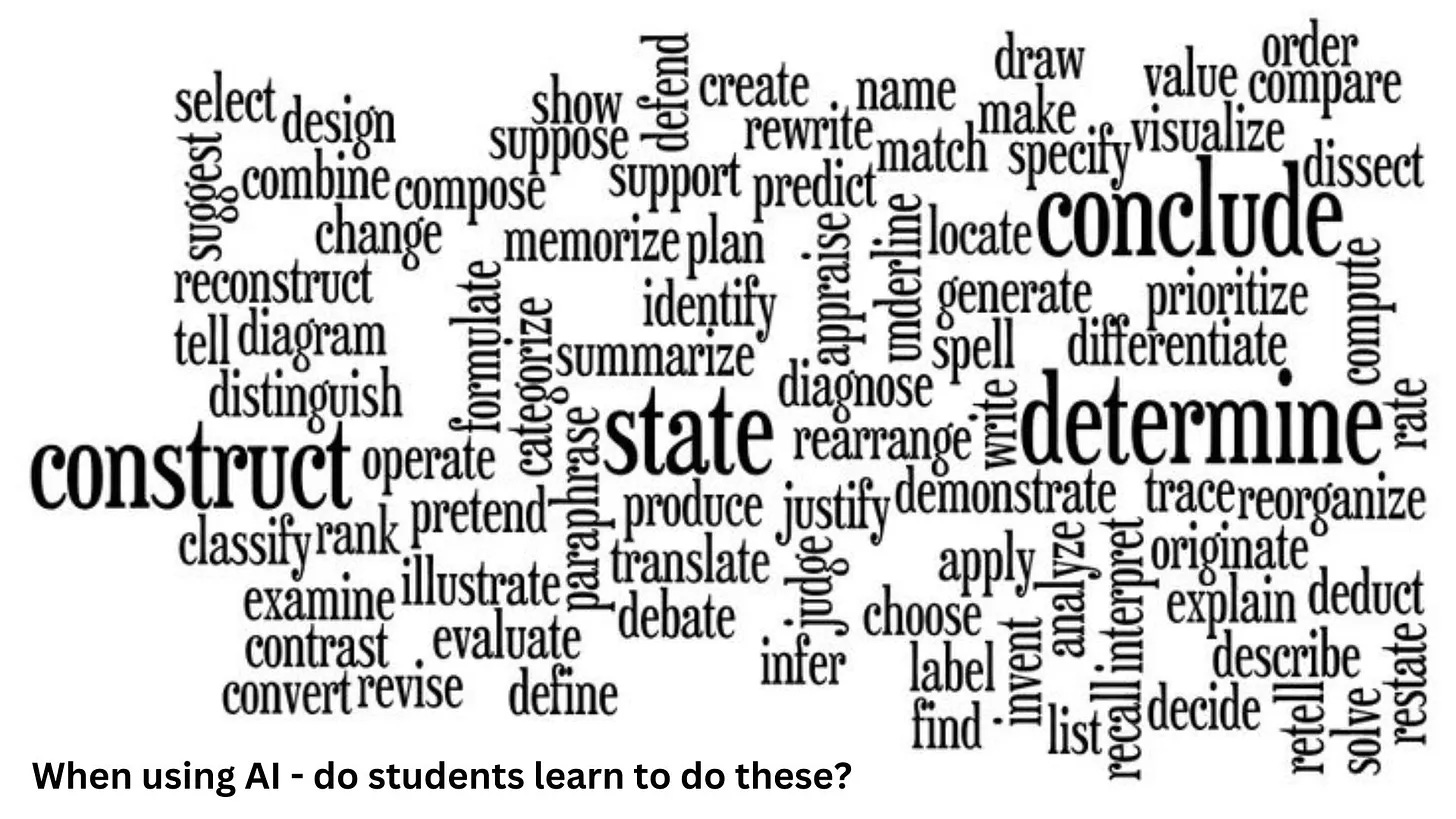

Now, for mechanical processes and curation - AI does have a place. It can “crunch” text quickly and generate lists, Excel sheets, data. If the process doesn’t involve cognition - let AI do that lifting. But if what you want done does indeed entail thought, cognitive work - don’t take the shortcut of ChatGPT or AI.

Let me lay out why; I’ll try to be brief. I’ve elaborated on all these points in other articles on this blog or my personal blog. Also, see this insightful discussion of teachers.

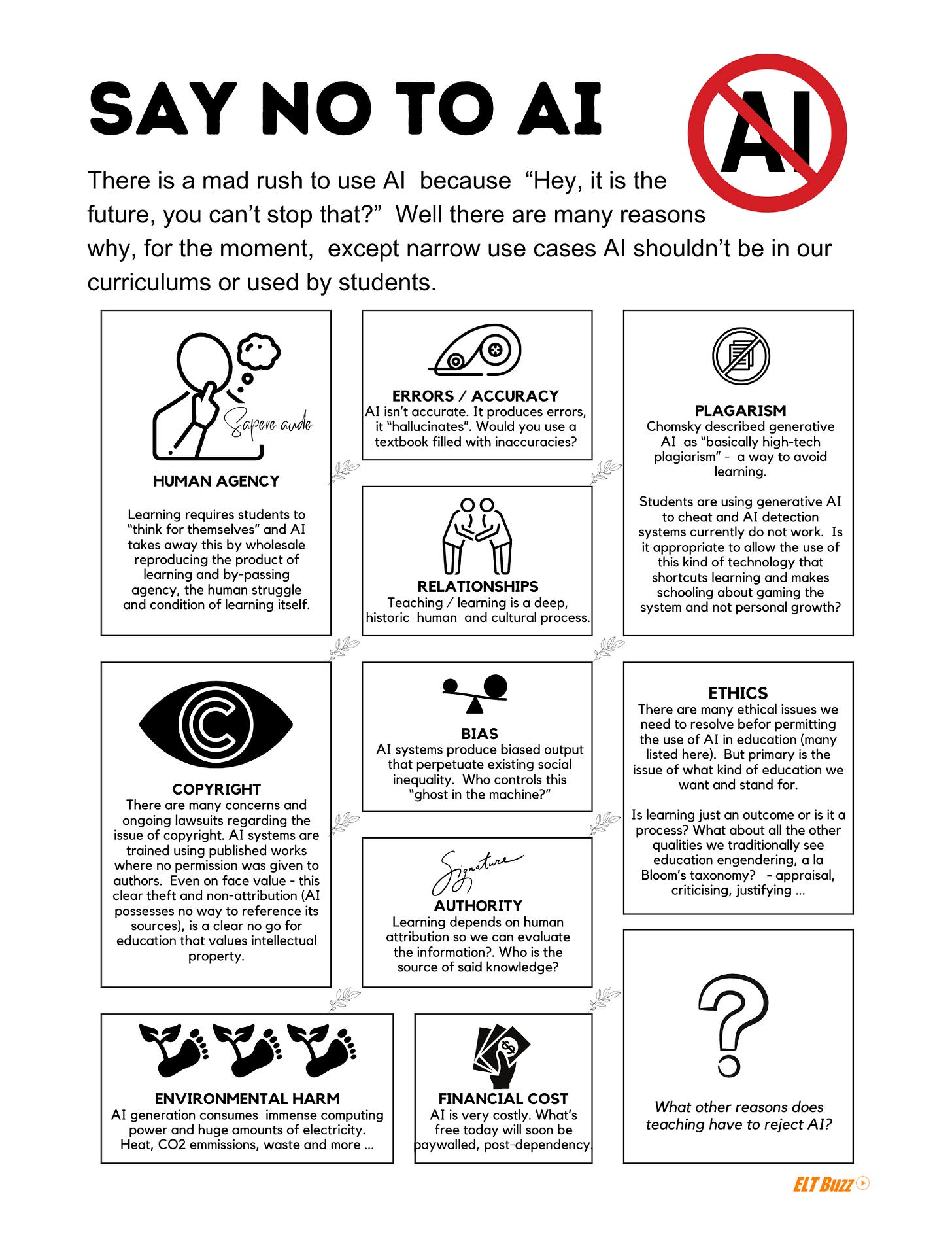

Agency. Think for yourself. “Sapere Aude” should be our motto for education in this AI age. Learning is a process, a crooked one with many variables, but central to all those variables is the notion that the learner has the courage to use their own reason. Not follow that of another, be it a teacher or a book or AI. The gears upstairs need to be a-grinding. The most precious gift we can “teach” and engender in our students is this ability to keep the light on upstairs, to think for oneself.

Language. In my field, English language teaching, it is so important to have students encounter the richness, the variety and, may I say, the so human creativity of language. Generative AI, in its logical (mechanical) deductive processing of text, produces pap: highly academic, processed-food-like language. We do a disservice to our students if we start making this a staple of their language diet.

Plagiarism. I see many educators calling for a “responsible” use of AI and claiming that with enough TLC, students will learn to use it responsibly. Maybe some will, but I’ve been around the block long enough to know most won’t. Our culture has slowly positioned schooling in the corner of “get it done”, as something that is just a mark and a “pass Go, collect your piece of paper”. It was why I became so disillusioned with my own graduate students and pre-service teachers - their constant refrain and chorus of “Is it on the test?” betrays a schooling culture that will embrace the “get it done faster” mentality of ChatGPT. No matter the cost to our learning, or the end product. Turnitin conservatively estimates 10% of student papers are AI-written or AI-assisted. And despite hype that detection of AI may be possible - all those familiar with the field say it is impossible to reliably detect whether AI was used in student writing or on student tests. And that’s a big problem - that AI was let loose on the world without any reliable tagging or way to detect or fingerprint its output. I believe this was done purposely - another reason not to trust those building the AI yellow brick road. The wizard behind the curtain has other motives.

Errors. ChatGPT just can’t keep its pants up. I’ve used it extensively in so many environments. Be it “hallucinations” or just plain “lies” - why educators would want to place this inaccuracy-riddled trickster in the hands of students is beyond me. Imagine if a textbook had just one or two errors in it - would we use it in our classes? Why do we give AI a pass on this? (That’s a deeper question - worthy of a future post.) I mean, it is laughable - ChatGPT can’t even count.

Equity And Inclusion. This is central and so important to education. AI has already cut drastically into educational equality and will continue to do so. Differing access to AI and training in its use will further erode public education and major gains in equity in our schools. The AI marketing teams shout how AI will actually decrease any achievement gaps but yeah - we were sold this several other times in the past decades. Technology has overall been one of the biggest villains in stretching the achievement gap and dampening equity at school.

Copyright. Generative AI was and continues to be trained on vast amounts of unauthorized data. Its responses are born of the soil of others, unacknowledged and uncited. AI is burdened by many lawsuits. Does it value the same things we in education profess - the sanctity of individual creation? Ask yourself that. Inevitably, the answer will be No.

Environmental Harm. It’s enormous, the electricity needed to power the miles and miles of server farms heating up planet earth. It’s mind-boggling how damaging AI truly is. All in the name of “advancement” and audience capture. Most tech gurus are betting on our future, betting big on it. They believe the energy costs will come down in time, they’ll crack that. Do you believe them?

Human Harm. We are back to the Mechanical Turk example. AI’s “ghost in the machine” is its tens of thousands of low-paid workers training the AI, labeling and translating, correcting and, under the table, making it all seem like magic. And then we have the even greater issue of human labor: how AI is replacing human labor, the result being cheaper, low-quality, humanless services and skills. It’s a huge issue. If you use AI, you are indeed supporting this race to the bottom. We are already feeling the pain in education - in publishing, editing, translation and even teaching itself.

The Financial Cost. Free is the buzzword. But AI won’t be free for long. Once they get you in, they’ll make sure there is no other place for you to go and then - up will come the paywall. AI companies are running up huge debt in expectation of future harvesting and payoffs. We saw this with Web 2.0 and the promise that these new online tools and collaborations would transform education. Have they? I suggest no, there simply is no evidence anyone is getting smarter - but a lot of companies sure have gotten richer. The AI bill will be due soon. Do we want to spend billions of educational dollars when there is a cheap alternative - students thinking, creating, talking, learning together face to face? What a deus ex humanus that would be!

Bias. AI is a human construct. It has a huge amount of inherent bias built in. Not to mention the bias just from being the birthchild of so many white-bread Stanford, MIT, Harvard go boys. The bias is really deep and we are just getting a small handle on it. Do we want a one-way highway, a vanilla road for our students to travel their learning road? Plus, what about the backdoors (admitted) whereby the AI can be manipulated, changed, tweaked? Are we selling not just our souls but our future souls?

Ethics. It’s a question of what we believe education should be, should entail and look like. I find a stark line drawn between educators on the issue of AI. Many say, “Hey, leave those kids alone.” They’ll figure it out. It’s a bright future out there with X tool, Y ability of AI. On the other hand, there are educators who are cautious about the social, cultural, philosophical and moral implications of using AI “to learn”. Do we really learn? What are the issues related to privacy, student data, sharing? Is there a way of schooling where there is less harm?

Relationships. Lastly, whatever education is, it is about people. Not pushing buttons and poof! I know! Cogito ergo sum. I think therefore I am. But beyond the private inner cognition, education is also a public and inter-personal process. We learn from others; learning is a cultural edifice. Think of all the teachers in your life who’ve contributed to your own state of learning - how empty it will be in a future where ChatGPT is the only one you can thank.
